A New Sound

It doesn’t take a music historian to tell the difference between an old song and a new one. Whether or not the lyrics or the tune are recognizable, the quality of the sound is a dead giveaway. Recently, however, advances in music technology have widened this gap in sound quality, making songs from even a decade ago sound outdated.

Older music has a distinct shallowness of sound, a static quality that makes it seem as if it is being played over the phone. That sound quality was once reserved for truly old music, or at the very least for songs that predate 2000s babies. That is simply no longer the case, a shift that can largely be attributed to the development of digital recording systems.

“What’s happened in the last 10 years is that digital [recording] has gotten as good as analog,” said Rychard Cooper, the media production specialist and audio technician at California State University, Long Beach (CSULB). “Now it can really capture all of the nuances of analog audio and synthesis.”

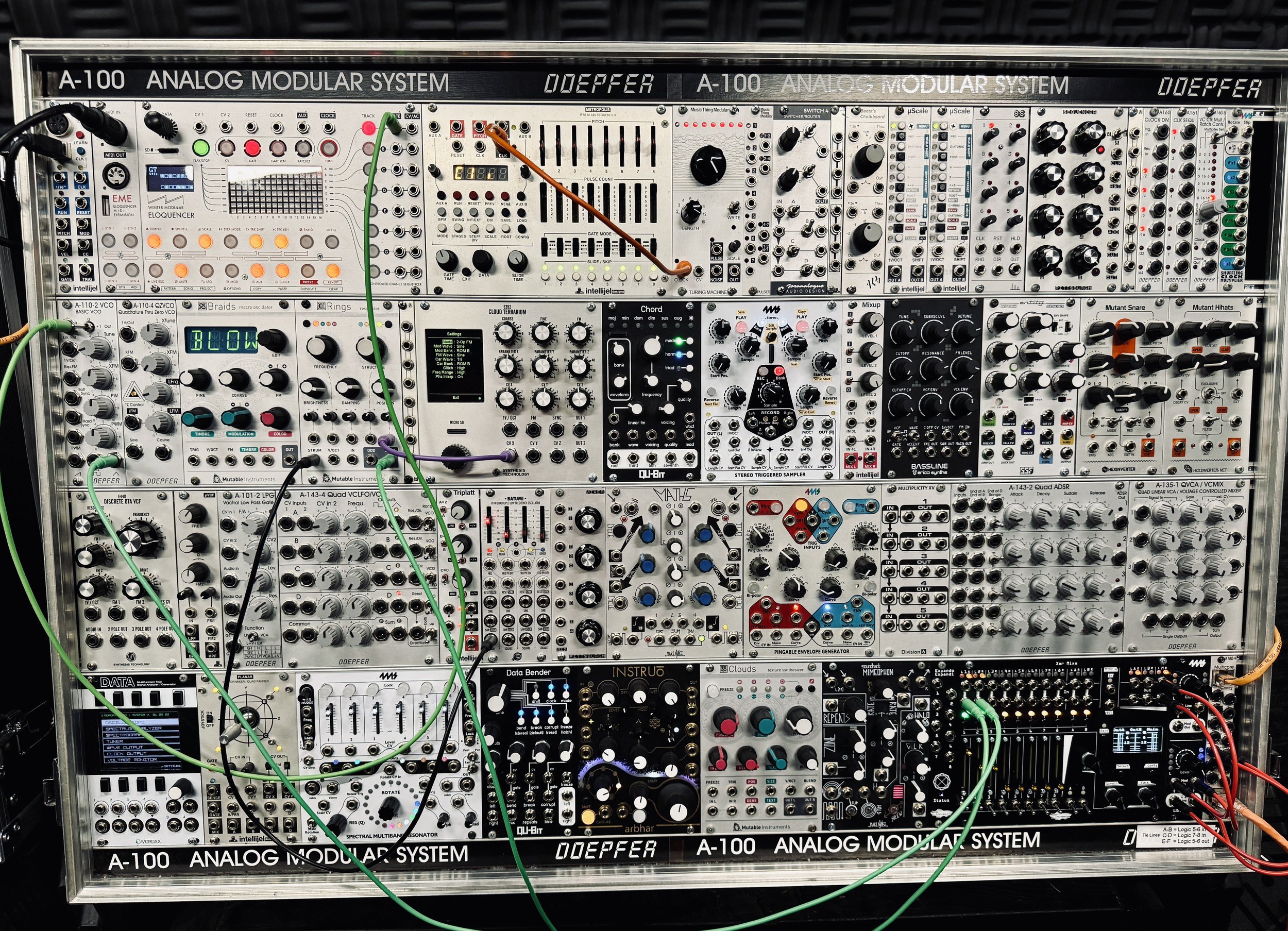

A-100 Analog Modular System. Photo by Nate Martinez.

Previously, analog recording systems were the go-to for producers and audio engineers because of their superior sound reproduction. Analog systems replicate sound naturally, in its truest form, whereas digital systems do so artificially by converting it into discrete samples, which leaves room for error.

Cooper explained that while analog recording systems were the best way to achieve the highest-quality sound, their one weakness was inconvenience. This is where digital systems came into play, since they did not require an entire studio room full of equipment. The result was an analog-versus-digital divide among producers and audio engineers, one that lasted until now.

“Where is the quality of digital reproduction better than we can perceive? I think we’re already there with audio where we can already reproduce things so accurately that the human hearing mechanism is actually the weak link in that chain,” Cooper said. “So I think we’re probably maxed out as far as audio goes.”

One example of this maxed-out quality can be heard in hip-hop’s use of Auto-Tune. In the mid-2000s, T-Pain was one of the first artists to popularize it in hip-hop and R&B, and what made his music so refreshing and enjoyable at the time was his bold, obvious use of the effect.

Fast-forward to today, and nearly every hip-hop artist uses the pitch-correcting technology. The difference is that artists can now control how noticeable it is to listeners, or use it to change the sound of their voices entirely.

Live coding process. Photo courtesy of Álvaro Cáceres.

Artists like Travis Scott and Kid Cudi, to name a few, owe much of their popularity to their unique use of Auto-Tune. Both Scott and Cudi have carefully engineered distinct, Auto-Tune-imbued humming sounds, yet they can switch back to their “natural” voices at any time. Even those natural voices are not really natural, though; they still use Auto-Tune. It’s just that much harder to tell now that digital music systems are so advanced.

Another offspring of the improvements in music technology is the technique called live coding, which combines computer science and music production.

“Live coding is improvising music or visuals using programming code,” said Álvaro Cáceres, a CSULB music composition graduate student originally from Pinto, Spain.

Generally, coding is thought of as giving a computer commands in order to complete a task, but Cáceres pushes back against that notion.

“Usually, when I’m doing electronic music in particular, I feel like rather than me trying to control an instrument, it feels like I’m establishing a dialogue with a piece of human knowledge,” Cáceres explained about his experience with live coding. “I’m trying to shift the focus from control to communication and interfacing.”

Cáceres using an analog system. Photo by Nate Martinez.

It’s hard to imagine how music technology could develop any further, but according to Cáceres, the evolution is not complete. Rapid advances in artificial intelligence have allowed the technology to extend its tentacles into nearly every field imaginable, including music.

“I’m pretty sure that the workflow of making music is going to be very different and it’s going to involve mediation with artificial intelligence, both when it comes to songwriting…and then in the production process,” Cáceres said.

The involvement of AI raises the question of whether music is in danger of losing its precious human element. As advanced as the technology is, it’s no Beethoven. Cáceres, however, believes that AI is not a threat and that it will allow musicians to focus on “higher level elements of music making.”

“I don’t think it’s going to detract from music as a craft, it’s just going to transform it,” Cáceres said. “But it’s been transforming ever since music started. From the moment we started using instruments other than our bodies to the moment that we started using machines, electricity, synthesis and electronic music. It just keeps evolving.”